Executive Summary

There has been a global transformation in technology, driven by the growing development and use of Artificial Intelligence (AI). Technology companies and governments are prioritising it, and the general public is increasingly gaining access to it. It is clear that AI is marking a new technological era. Whilst the Global North sees it as a means to a utopian future, the Global South has experienced it as a tool of weaponisation. One way AI is being adapted is in mass surveillance systems. This raises concerns about how biometric data is obtained and used in ways that infringe on the digital identities of minority groups (Weitzberg et al., 2021). The risks this poses to human rights are significant, and they can only worsen if the issues at hand are not addressed. This policy brief examines AI’s role in mass surveillance through the case of Palestinians living at risk in the Occupied Palestinian Territories (OPT). It sets out how these technologies affect people’s lives, what the principal concerns are, and what immediate and long-term actions can be taken to prevent or minimise the effects of AI facial recognition in mass surveillance (Robinson et al., 2020).

Introduction

Overview of the Topic:

Mass surveillance is one of the defining issues of the 21st century. In a world where private data has become so public, there are no safe spaces. AI has rapidly accelerated this trend. Minority groups are the most vulnerable to it, because their data is what feeds these systems. Vulnerable groups have few means of defending themselves, and the exploitation of their rights deepens global disparities and inequalities (Privacy International, 2022).

AI-supported facial recognition software has significantly shaped the ways in which the Israeli military can control Palestinians and violate their human rights. Israel is one of the world leaders in AI development and a hub for technology corporations, so there needs to be more legislation and monitoring of how it uses these tools. The technology is being used heavily to control Palestinians through surveillance and the collection of biometric data. It has become an extremely powerful tool for violating the human rights of marginalised groups.

Aims of the Policy Brief: This policy brief aims to:

1. Highlight how artificial intelligence is being used for the mass surveillance of Palestinian people

2. Examine the specific human rights implications for these vulnerable groups

3. Propose policy recommendations to mitigate risks

Relevance and Urgency: Addressing the human rights implications of AI is urgent due to the rapid pace of AI adoption and its profound impact on already marginalised communities. Ensuring that AI technologies are used responsibly is crucial for promoting social justice and equitable development.

“In addition to the constant threat of excessive physical force and arbitrary arrest, Palestinians must now contend with the risk of being tracked by an algorithm, or barred from entering their own neighbourhoods based on information stored in discriminatory surveillance databases.”

Agnès Callamard, Amnesty International (2023b)

The Problem

Case Study: How are AI Facial Recognition Surveillance Systems Impacting the Lives of Palestinians?

Israel makes extensive use of artificial intelligence to extend the reach and impact of its technologies in cybersecurity, surveillance, and advanced weaponry. This has helped the state become a global military power and technology leader, as well as a hub for major players in the high-tech industry. However, Israel’s advances in artificial intelligence have also enabled it to further violate the human rights of Palestinians in the occupied territory (Amnesty International, 2023a). Israeli authorities use these AI systems to deploy automated weaponry, facial recognition software, internet monitoring, and specialised military targeting, among other tools. Together, these place Palestinians at extreme risk.

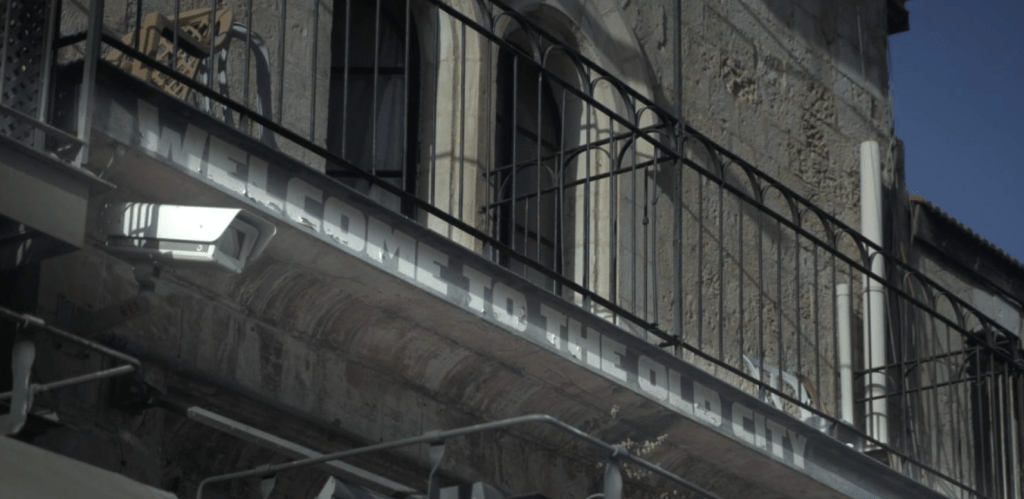

Facial recognition software has been in growing use over the past few decades. Artificial intelligence has marked an escalation of these methods, especially within Israel’s genocide of Palestine. Israeli authorities have integrated it into the checkpoints that Palestinians must pass through, scanning the faces of every civilian who crosses. This allows people to be identified rapidly, and anyone suspected of ties to Hamas can then be detained. These technologies have been documented as sometimes misidentifying individuals. This gives Israeli authorities scope to abuse their position and detain innocent civilians. The facial scanning itself is already an invasion of privacy and human rights, because the biometric data is collected entirely without consent. These automated systems further entrench the dehumanisation that Palestinians already face. The collection of this data makes mass surveillance of Palestinians easier and restricts their freedoms even further. At its peak, the AI targeting system ‘Lavender’ identified as many as 37,000 people as targets (Abraham, 2024a), and this number may continue to grow.

Under the so-called ‘Wolf Pack’ system, soldiers are encouraged to hide behind the decisions of the automated system. They are also encouraged to collect ever more biometric data to feed it. In effect, civilians are forced to give up their rights in order to pass through the checkpoints that lead to their homes and vital services. If the system flags someone as a ‘threat’, soldiers follow the corresponding protocol. It no longer matters whether soldiers recognise the person as familiar or non-threatening. If the system declares them ‘dangerous’, soldiers are granted ‘permission’ to enforce a regime that violates human rights and endangers lives. Relying on AI allows soldiers to psychologically ‘free themselves’ from deciding whether someone is a threat. They no longer see Palestinians as people but as colours (green/safe, yellow/possible threat, red/dangerous), which makes it easier for them to commit crimes against humanity. Other systems rank how likely someone is to be a member of Hamas on a scale of 1 to 100 (Abraham, 2024b). Accountability can thus be deferred to the automated system, which is promoted as a method of decision-making despite being unreliable. The protocols that follow can range from ‘arbitrary’ searches to the separation of families to killings. These decisions rest on automated systems that assess the risk a person poses partly on the basis of their ethnicity, among other biased datasets. Gamification adds a further layer. Applications used by Israeli soldiers, such as Blue Wolf, have reportedly ranked units on scoreboards by how many Palestinians they photographed. By July 2024, Gaza’s death toll had risen to 39,145 (Ministry of Health, 2024), and AI technologies have been a major contributor to it.

This case study shows that Israel justifies its developments in artificial intelligence and facial recognition as a matter of public safety. However, the deployment of systems such as ‘Wolf Pack’ is already a blatant human rights violation. Such systems should not be used by any state against any group of people.

1. Privacy Violations:

Israel’s collection of biometric data is a direct violation of Palestinians’ privacy. The data is gathered without consent or any ethical basis, which makes this a clear breach of their rights.

2. Discrimination and Bias:

The AI systems being deployed can only function by perpetuating existing biases. They are trained on already distorted data, so they will misidentify people. There is evidence that the facial recognition software bases part of its ‘danger rating’ on racial features, which reveals deep-rooted discrimination in the politics of the artefact. The algorithm is being used for ‘law enforcement’, a display of coloniality carried forward into the post-industrial era of technology. As a result, the stakes are much higher than for other technologies (Madianou, 2019).

3. Exacerbation of Inequalities:

These technologies are used only against Palestinians, so they deepen the inequalities Palestinians already face. Because the Israeli military controls these systems, its grip on these communities becomes much stronger. Palestinians have no knowledge of what data has been collected about them or whether it is even accurate. This widens the divide they face both in the digital sphere and in everyday life, since the two are deeply intertwined for these vulnerable groups.

4. Lack of Accountability:

AI makes it easier for soldiers to shift accountability onto the system, since it is nominally the one making the decisions. Furthermore, developers and the military cannot be held fully accountable, because the workings of these AI systems are not disclosed. The public cannot know the full extent of how these systems function or how they are being used.

5. Ethical Concerns:

The use of artificial intelligence in facial recognition technology raises major ethical issues. Even in the freest countries in the world, the use of facial recognition for surveillance is an ethical concern. When it is employed by an authoritarian state, it poses a far greater threat to people’s rights.

Opportunities for Change: Recommendations

For Israel

The primary way to stop this would be for Israel to end its apartheid state. The government should also stop using AI mass surveillance software to collect the biometric data of Palestinians. These mass surveillance systems are operated by the Israeli military, so if the state ceased its crimes, there would no longer be any need for them. This should be followed by the secure disposal of the Palestinian data already gathered, because a leak of this information would pose a serious risk.

For States that have Diplomatic Connections with Israel

Another direct approach would be for other states to exert more control over the situation. In the first instance, this could take the form of condemning Israel’s actions and its use of surveillance and military technology. Many major technology companies are based in Israel, so countries with jurisdiction over these companies should use it to ban them from contributing to these war crimes. This would reduce Israel’s capacity to develop these systems further. It could also lead these companies to move their operations out of Israel.

Access to the AI Facial Recognition Software / Implementation of Ethical AI Legislation

Legislation could require developers to be transparent about how these systems function and what data is being collected. Such legislation could prevent unethical technologies from being put into use. It could also inform the reform of current AI laws and the drafting of any new legislation that is needed. This transparency would make it possible to hold corporations and states accountable for any crimes they are committing or attempting to commit. The key actors to consider are governments, international organisations, and technology companies.

Establish an Organisation or Watchdog Group that specialises in Palestinian Human Rights and AI

Another way to reduce the effects of these technologies is to establish dedicated watchdog groups with specialist knowledge. This specific issue needs constant and consistent scrutiny. A dedicated body could specialise in protecting vulnerable groups from AI systems (e.g. Palestinian human rights and AI facial recognition surveillance software). AI developers, private-sector investors, and governments could then consult specialists about anything unethical, and they would know that they are being monitored as well. Such a body would draw more attention to this growing issue. Individuals, organisations, and companies would know whom to contact, and that someone is aware of the ongoing issues. It could also place pressure on those engaged in illegal activities and make information more accessible and standardised.

Spreading Awareness of AI/Palestine through a Global Educational Campaign

Part of the problem is a lack of global awareness of the issues at hand. Problems are poorly communicated not only in the Global South but also in the Global North. What is happening with AI is relevant all over the world. Palestine is simply one example of a place where AI is being actively and overtly weaponised. Other states use it less visibly, which leaves citizens in countries such as Britain feeling protected. In reality, facial recognition systems have been used by British police forces for years (Home Office, 2023). One response would be a campaign to raise awareness of how governments and corporations are using AI-aided mass surveillance. An informed public can take measures to protect its data and privacy. It can also create external pressure on international counterparts to stop states such as Israel. A campaign could also prompt discussion of how similar technologies are beginning to be used in other countries, and serve as a warning that encourages other states to control this kind of activity. These campaigns should be carried out by NGOs and international organisations.

Conclusion

AI is a growing concern for citizens across the world, but it affects some far more than others. Its development and the threats it poses vary from one society to another. We must address the direct dangers AI already poses, such as its applications in facial recognition and surveillance. These human rights violations may currently seem confined to a specific demographic. Even so, we must stop being bystanders, both to protect those who are victims now and to protect those who may become victims in the future. By combining international support with appropriate legislation and accountability, we can guide the development of ethical AI technologies and give people their freedom back.

References

Abraham, Y. (2024a) ‘Lavender’: the AI machine directing Israel’s bombing spree in Gaza. [online] +972 Magazine. Available at: https://www.972mag.com/lavender-ai-israeli-army-gaza/

Abraham, Y. (2024b) Israel: Stop using biometric mass surveillance against Palestinians. [online] ARTICLE 19. Available at: https://www.article19.org/resources/israel-stop-using-biometric-mass-surveillance-against-palestinians/

Amnesty International (2023a) Israel and Occupied Palestinian Territories: Automated apartheid: How facial recognition fragments, segregates and controls Palestinians in the OPT. [online] Amnesty International. Available at: https://www.amnesty.org/en/documents/mde15/6701/2023/en/

Amnesty International (2023b) Israeli authorities using facial recognition to entrench apartheid. [online] Amnesty International. Available at: https://www.amnesty.org/en/latest/news/2023/05/israel-opt-israeli-authorities-are-using-facial-recognition-technology-to-entrench-apartheid/

Flyverbom, M., Madsen, A.K. and Rasche, A. (2017) ‘Big data as governmentality in international development: Digital traces, algorithms, and altered visibilities’, The Information Society, 33(1), pp. 35–42. doi:10.1080/01972243.2016.1248611

Home Office (2023) Police use of facial recognition: Factsheet – Home Office in the media. [online] homeofficemedia.blog.gov.uk. Available at: https://homeofficemedia.blog.gov.uk/2023/10/29/police-use-of-facial-recognition-factsheet/

Madianou, M. (2019) ‘Technocolonialism: Digital innovation and data practices in the humanitarian response to refugee crises’, Social Media + Society, 5(3), p. 2056305119863146. doi:10.1177/2056305119863146

Ministry of Health (2024) Gaza death toll rises to 39,145 as Israel kills 55 more Palestinians. [online] Middle East Monitor. Available at: https://www.middleeastmonitor.com/20240724-gaza-death-toll-rises-to-39145-as-israel-kills-55-more-palestinians/

Privacy International (2022) PI’s guide to international law and surveillance. [online] Privacy International. Available at: https://privacyinternational.org/report/4780/pis-guide-international-law-and-surveillance

Robinson, L., Schulz, J., Dunn, H.S., Casilli, A.A., Tubaro, P., Carveth, R., Chen, W., Wiest, J.B., Dodel, M., Stern, M.J., Ball, C., Huang, K.-T., Blank, G., Ragnedda, M., Ono, H., Hogan, B., Mesch, G.S., Cotten, S.R., Kretchmer, S.B. and Hale, T.M. (2020) ‘Digital inequalities 3.0: Emergent inequalities in the information age’, First Monday, 25(7). doi:10.5210/fm.v25i7.10844

Weitzberg, K., Cheesman, M., Martin, A. and Schoemaker, E. (2021) ‘Between surveillance and recognition: Rethinking digital identity in aid’, Big Data & Society, 8(1), p. 20539517211006744. doi:10.1177/20539517211006744